Business leaders are witnessing the next evolution of AI – from systems that simply predict or generate content to those that act with autonomy. Agentic AI refers to AI-driven agents that can make decisions and take actions in pursuit of goals, rather than just outputting answers. Unlike basic robotic process automation (RPA) or single-turn chatbots, these agents exhibit adaptability, goal-oriented behavior, and independence. In fact, agentic AI and its agent systems are expected to rank among the top strategic technology trends, poised to transform industries like healthcare, finance, and manufacturing by seamlessly integrating with data platforms and tackling time-consuming jobs (What is Agentic AI? | Salesforce US). CIOs are paying attention because this represents a leap in what automation can do – moving from scripted tasks to proactive “digital workers” capable of handling complex, dynamic workflows.

Agentic AI is often defined as an AI system that can accomplish specific goals with limited human supervision, using multiple AI models (agents) that mimic human decision-making and collaborate in real time (What Is Agentic AI? | IBM). In simpler terms, it’s AI with agency – the ability to act autonomously without constant oversight (What is Agentic AI? | Salesforce US). This stands in stark contrast to retrieval-augmented generation (RAG)-based solutions or basic automations. RAG-enhanced chatbots, for example, can fetch knowledge to improve responses, but they still lack autonomy and proactive behavior – they only respond to user queries and won’t initiate actions on their own (Agentic AI vs RAG - A Handy Guide). Traditional automation scripts are similarly passive, following predefined steps and breaking down when conditions change. Agentic AI, on the other hand, can perceive its environment, make context-dependent decisions, and act on its own initiative to achieve a goal (Agentic AI vs RAG - A Handy Guide). This distinction is critical for enterprises: whereas conventional automation delivers efficiency in static, well-defined processes, agentic AI promises agility in dynamic, unpredictable scenarios.

Agentic AI blends advanced reasoning with autonomy, enabling AI “agents” to not just think, but also act within business processes. For enterprises, agentic systems represent a new class of automation that can proactively handle multi-step tasks, coordinate with applications, and adapt to real-time data, far beyond the capabilities of traditional bots.

Why is agentic AI becoming so critical now? Simply put, businesses are drowning in complexity – from volatile supply chains to ever-changing customer expectations – and static automation can’t keep up. Agentic AI offers a way to delegate complex decision-making to intelligent systems that learn and improve over time. Early adopters are already seeing the benefits: agentic AI systems don’t just answer questions or follow scripts, they take action. For example, an IT support agent could not only identify an issue but also open a ticket, gather relevant diagnostics, attempt fixes, and escalate if needed – all autonomously. Such capabilities were impractical before. Today’s advanced AI models provide the reasoning engine for these agents, and new frameworks let them interface with tools and data sources. In short, agentic AI is emerging as a strategic priority because it augments human teams with autonomous counterparts – amplifying productivity, accelerating decision cycles, and unlocking new possibilities for innovation.

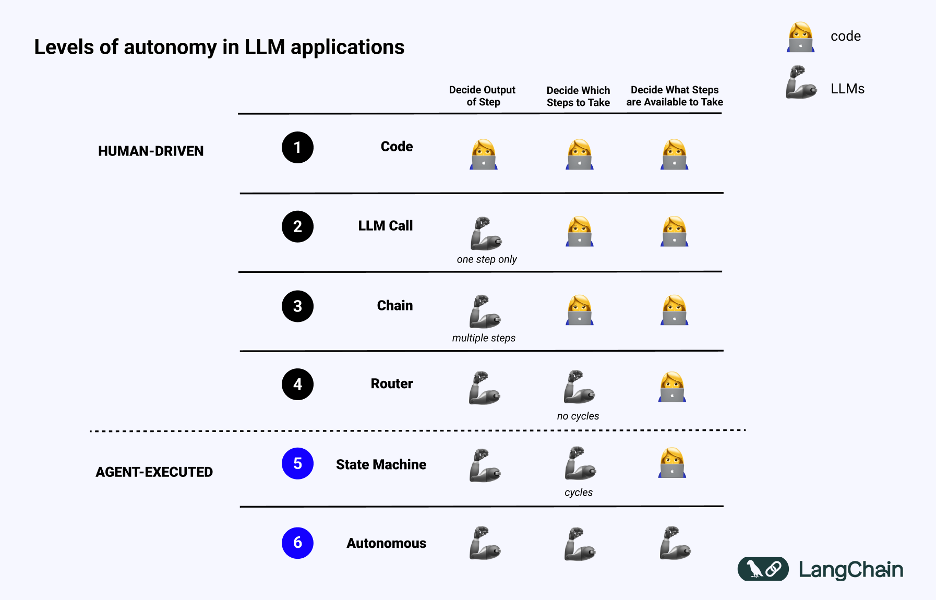

Levels of agentic AI

Not all AI agents are created equal. It’s useful to think in terms of maturity levels or degrees of autonomy that an AI system can have. Below are three levels of agentic AI, ranging from simple tool-based assistants up to fully autonomous agents:

1. Tool use – AI as smart assistants

At the first level, AI agents act as smart assistants to humans, extending our capabilities through tool use. These agents don’t roam entirely on their own; instead, they are user-driven but far more powerful than basic chatbots. They can connect to external tools, APIs, and databases to perform tasks on behalf of the user. For example, a sales analyst’s AI assistant might pull the latest figures from a database, generate a chart in Excel, or draft an email – all in response to a single natural language request. By allowing AI to interact with external resources, we significantly broaden what it can do (Top 4 Agentic AI Design Patterns).

Microsoft’s Copilot and OpenAI’s plug-ins are real-world examples of this pattern: the AI remains under the user’s guidance, but it can search the web, run code, query systems, and then integrate those results into a solution. Essentially, the agent serves as a co-pilot that enhances human productivity – handling the grunt work or complex lookups instantly. The key is that tool-using agents are reactive (they wait for a prompt or command) but augmented with the ability to carry out sophisticated actions once prompted. They differ from basic automation in that they leverage AI reasoning for flexible tool use, rather than executing a rigid script. This level is often a practical starting point for enterprises, as it adds intelligence to existing workflows without ceding full control – the human remains “in the driver’s seat,” while the AI provides the navigation and extra hands.
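
To make the tool-use pattern concrete, here is a minimal Python sketch of the dispatch step in a tool-using assistant. It assumes the model has already turned the user’s request into a structured tool call (a tool name plus arguments). The tool names `get_sales_figures` and `draft_email`, the call format, and the sample figures are all hypothetical; a real assistant would obtain the call from an LLM and reach live systems.

```python
from typing import Any, Callable, Dict

# Hypothetical tools: stand-ins for a database query and an email service.
def get_sales_figures(region: str) -> Dict[str, Any]:
    sample = {"emea": 1200.0, "apac": 950.0}  # illustrative numbers, not real data
    return {"region": region, "revenue": sample.get(region, 0.0)}

def draft_email(to: str, body: str) -> str:
    return f"To: {to}\n\n{body}"

# Registry of the only tools the assistant is allowed to use.
TOOLS: Dict[str, Callable[..., Any]] = {
    "get_sales_figures": get_sales_figures,
    "draft_email": draft_email,
}

def run_tool_call(call: Dict[str, Any]) -> Any:
    """Execute one tool call shaped like {"tool": name, "args": {...}}.

    The assistant is reactive: nothing runs until a user request produces a call.
    """
    name = call["tool"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call["args"])
```

For example, `run_tool_call({"tool": "get_sales_figures", "args": {"region": "emea"}})` returns `{"region": "emea", "revenue": 1200.0}`. Keeping an explicit registry means the assistant can only invoke tools that someone has deliberately exposed to it.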

2. Automatons – Contextual, scripted agents

The next level involves agents that can execute predefined sequences of actions autonomously when certain conditions or contexts are met. Think of these as intelligent automatons: they combine the reliability of scripted workflows with a degree of contextual awareness. Unlike the tool-using assistant, which acts only when invoked by a user, automatons are trigger-driven. They might wake up on an event (e.g. a threshold is crossed, an email arrives, a schedule is due) and then carry out a multi-step procedure without needing a person to initiate each step. For instance, consider an AI agent monitoring inventory levels – when stock of a product falls below a set point, it could automatically place a replenishment order or redistribute stock from another warehouse, following a procedure approved by the business. Such an agent is following a predefined workflow, but it uses AI to make sure each step makes sense in context (e.g. choosing the optimal supplier based on current data).

These workflow-oriented agents are extremely efficient for routine, repeatable processes. However, their autonomy is bounded by the script or playbook we give them. They differ from traditional RPA bots in that they can handle some variability or decision points using AI (for example, understanding an unstructured email to decide which template response to send), but they are not free-form thinkers. It’s a case of “if X happens, do Y,” with the sophistication that X can be a complex condition and Y can involve interacting with multiple systems. It’s important to note the distinction between these workflow agents and truly adaptive agents: workflows follow predefined sequences, whereas true agents are autonomous decision-makers (AI Agents — A Software Engineer’s Overview - DEV Community). In practice, many enterprise “AI automation” solutions today fall into this automaton category – they intelligently execute tasks like invoice processing, report generation, or user onboarding by stringing together known steps and invoking AI skills at certain points (like data extraction or classification). This level already delivers major efficiency gains, but it still operates within human-defined boundaries – the agent won’t pursue new goals outside its script.
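
The inventory example can be sketched as a trigger-driven playbook. This is a minimal illustration under stated assumptions: the reorder point, target level, and supplier data are hypothetical inputs, and `choose_supplier` uses a simple rule (cheapest supplier within a lead-time limit) as a stand-in for the contextual judgment an AI model would supply.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Supplier:
    name: str
    unit_price: float
    lead_time_days: int

@dataclass
class Order:
    sku: str
    quantity: int
    supplier: str

def choose_supplier(suppliers: List[Supplier], max_lead_time: int) -> Optional[Supplier]:
    # Placeholder for the contextual decision an AI model would make:
    # pick the cheapest supplier that can deliver in time.
    eligible = [s for s in suppliers if s.lead_time_days <= max_lead_time]
    return min(eligible, key=lambda s: s.unit_price) if eligible else None

def on_stock_change(sku: str, level: int, reorder_point: int, target_level: int,
                    suppliers: List[Supplier], max_lead_time: int = 5) -> Optional[Order]:
    """Approved playbook: runs on every stock-level event, acts only below the reorder point."""
    if level >= reorder_point:
        return None  # trigger condition not met; the automaton stays idle
    supplier = choose_supplier(suppliers, max_lead_time)
    if supplier is None:
        return None  # outside the script; a real deployment would escalate to a person
    return Order(sku=sku, quantity=target_level - level, supplier=supplier.name)
```

The fixed structure (check the trigger, pick a supplier, place an order) is what makes this an automaton: the agent can vary which supplier it picks, but it cannot invent a new response, such as redistributing stock, unless that branch is written into the playbook.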

3. Full agentic AI – Autonomous decision-makers

At the highest level, we have fully agentic AI: systems that are autonomous problem-solvers, capable of making and executing decisions with minimal human intervention. These agents don’t just follow a script – they can figure out the script for themselves. Given a goal or objective, a full agentic AI will plan, strategize, and take actions iteratively to accomplish that goal, consulting humans only when absolutely necessary or when configured to do so. This is the realm of AI that can, for example, be told “optimize our delivery routes for tomorrow” and it will gather data, consider constraints like traffic and fuel costs, generate a plan, and perhaps even dispatch instructions to drivers or a fleet management system – all autonomously. Such an agent maintains a long-term agenda, adapts as circumstances change, and can even recover from errors by itself (to a degree). Under the hood, it uses advanced reasoning to evaluate options and set its own sub-goals on the fly. It’s proactive rather than reactive. According to one definition, an agentic AI at this level can initiate actions independently, set goals based on environmental feedback, and optimize towards those objectives with minimal human oversight (The Rise of Agentic AI: Unlocking the Future of Autonomous Intelligence). This is akin to having a junior colleague or an analyst that works tirelessly 24/7, makes decisions in real time, and only occasionally asks for guidance or approval. Of course, with great power comes great responsibility – and risk.

Fully autonomous agents need robust guardrails (more on that later) to ensure they don’t go off the rails. But when done right, they unlock scenarios like autonomous marketing optimization (AI agents analyzing market conditions and previous activities to create and measure new targeted marketing material) and self-optimizing operations where an AI orchestrates many moving parts of a process continuously. It’s the closest thing we have today to an “AI CEO” for a narrow domain – a system that not only thinks but also acts on its decisions to drive outcomes. For enterprises, reaching this level means significant leverage: these agents can handle complexity and scale that would overwhelm purely manual oversight. However, it also requires a mature approach to AI governance, as the human role shifts to one of supervisory control and strategic guidance rather than step-by-step instruction.
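
The plan, act, and observe cycle of a fully agentic system can be reduced to a short loop. The sketch below is illustrative only: `plan` and `act` are hypothetical stand-ins for an LLM-driven planner and real tool calls, the routing scenario is invented, and the `max_steps` budget represents one of the simplest guardrails you can put around an autonomous agent.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]

def run_agent(state: State,
              plan: Callable[[State], str],
              act: Callable[[str, State], State],
              goal_reached: Callable[[State], bool],
              max_steps: int = 10) -> State:
    """Plan, act, and observe until the goal is met or the step budget runs out."""
    history: List[str] = []
    for _ in range(max_steps):          # step budget: a basic guardrail
        if goal_reached(state):
            break
        action = plan(state)            # the agent picks its own next sub-goal
        try:
            state = act(action, state)  # execute against tools or systems
            history.append(f"ok:{action}")
        except RuntimeError as err:
            # Record the failure so the next planning step can route around it.
            state = {**state, "last_error": str(err)}
            history.append(f"failed:{action}")
    return {**state, "history": history}

# Hypothetical routing example: the primary route fails, so the agent replans.
def plan(state: State) -> str:
    return "fallback_route" if "last_error" in state else "primary_route"

def act(action: str, state: State) -> State:
    if action == "primary_route":
        raise RuntimeError("road closed")
    return {**state, "delivered": True}
```

With these stand-ins, `run_agent({}, plan, act, lambda s: s.get("delivered", False))` fails on its first attempt, records "road closed" in its state, and then chooses the fallback route, so the returned history is `["failed:primary_route", "ok:fallback_route"]`.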

Emerging design patterns in agentic AI

As organizations experiment with agentic AI, certain design patterns are emerging as best practices for building effective autonomous systems. These patterns are like architectural blueprints – they show how to structure AI agents and their interactions to achieve reliability, flexibility, and control. Four key patterns are outlined below, each addressing a different aspect of agent behavior and governance.

Event-driven autonomy (trigger-based agents)

One powerful pattern is event-driven autonomy, where AI agents listen for specific triggers or events and then proactively take action. In traditional systems, this concept isn’t new (event-driven architectures have been around for years), but with AI agents the difference is in what actions they decide to take when triggered. An agentic AI built on this pattern operates almost like a vigilant assistant who never sleeps: it monitors streams of events – whether they be database updates, IoT sensor readings, user actions, or time-based schedules – and it springs into action when certain conditions occur. For example, imagine a customer support AI that watches social media mentions of your company; if a negative post with high traction appears, it could automatically create a critical alert, draft a response, or even initiate a refund process for the affected customer, all before a human even notices the issue.

The Event-Driven pattern ensures the AI is situationally aware and not just waiting for commands. Technically, this often involves triggers that launch agent workflows. Common triggers include scheduled times, webhooks (incoming data from other systems), or changes in data records. By leveraging triggers, organizations can create responsive and autonomous AI agents capable of handling complex workflows without constant manual intervention (Triggers - Invicta AI). These agents effectively operate continuously, responding to events and executing tasks around the clock. The result is a big boost in operational efficiency – tasks happen in real-time, exceptions are caught immediately, and the business can be much more proactive. For CIOs, event-driven agentic AI can augment or replace traditional event-response systems with ones that not only detect but also decide and act. Key design considerations here include clearly defining the events of interest, setting thresholds to avoid overreaction, and implementing guardrails so that autonomous actions remain within acceptable bounds.
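
A trigger layer of this kind might look like the following minimal sketch: each trigger pairs an event type with a condition (the threshold that guards against overreaction) and a handler (the agent’s action). The `TriggerRegistry` class, the event fields, and the thresholds are hypothetical; in production, events would typically arrive from a webhook endpoint, scheduler, or message queue rather than direct `publish` calls.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

Event = Dict[str, Any]
Condition = Callable[[Event], bool]
Handler = Callable[[Event], Any]

@dataclass
class TriggerRegistry:
    """Maps event types to (condition, handler) pairs."""
    triggers: Dict[str, List[Tuple[Condition, Handler]]] = field(default_factory=dict)

    def on(self, event_type: str, condition: Condition, handler: Handler) -> None:
        """Register a trigger: run `handler` for events of this type that satisfy `condition`."""
        self.triggers.setdefault(event_type, []).append((condition, handler))

    def publish(self, event_type: str, event: Event) -> List[Any]:
        """Deliver one event and return the results of every handler that fired."""
        results = []
        for condition, handler in self.triggers.get(event_type, []):
            if condition(event):  # threshold check guards against overreaction
                results.append(handler(event))
        return results
```

Registering `registry.on("social_mention", lambda e: e["sentiment"] < -0.5 and e["shares"] >= 500, lambda e: f"ALERT: {e['url']}")` means a negative post with 20 shares is ignored, while one with 800 shares produces an alert.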

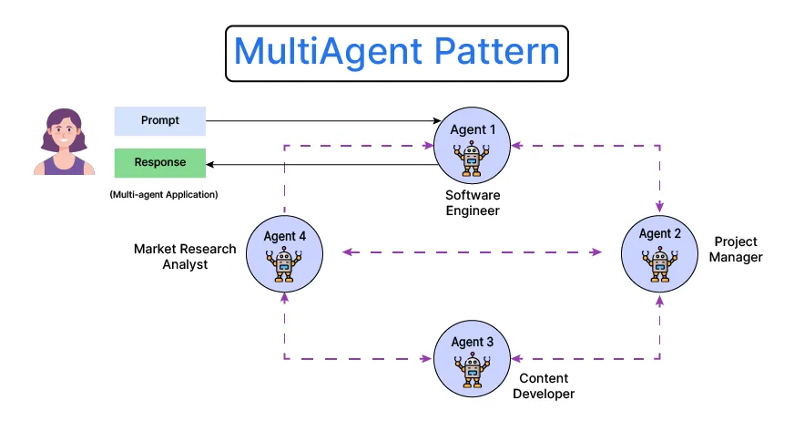

Multi-agent collaboration (swarm intelligence)

The “Multi-Agent Pattern” design (illustrated above) involves multiple specialized AI agents working together, akin to a well-coordinated team. Each agent is assigned a specific role (e.g., data analysis, planning, execution), and they communicate with each other to divide and conquer a complex task. In the diagram, an example multi-agent application uses four agents – a Software Engineer, Project Manager, Content Developer, and Market Research Analyst – that pass information (dotted lines) and results among themselves. This specialization and coordination allow the system to tackle the problem more efficiently than a single agent working alone.

Another emerging pattern is to use multiple agents in collaboration, each with specialized skills or roles, to achieve a goal. Just as in human teams, dividing a complex project among experts can be far more efficient than one generalist trying to do everything. In an agentic AI context, you might have one agent that excels at mathematical reasoning, another at interfacing with an API, another at writing content – and a coordinator agent that breaks the overall goal into subtasks and assigns them accordingly. These collaborative agents work in parallel and exchange information to converge on a solution (Top 4 Agentic AI Design Patterns). For example, consider an AI-driven marketing campaign creator: one agent could analyze market data, a second agent could generate creative copy, a third could forecast performance metrics, and a fourth (the “manager” agent) could compile the outputs and decide on the final campaign strategy. They operate as an autonomous ensemble with minimal human input.

The benefit of this Multi-Agent pattern is twofold: specialization (each agent can be optimized for a certain type of task or use a certain modality of AI) and redundancy (agents can cross-verify each other’s results or take over tasks if one agent’s approach fails). Research and practical projects have shown that multi-agent systems can solve problems that are too complex for a single agent and can also provide a form of checks-and-balances (one agent can critique or refine another’s output). In essence, multi-agent collaboration is about scaling horizontally – adding more intelligence through additional agents, not just scaling up one model. However, this pattern requires careful orchestration: communication protocols between agents, consensus mechanisms if agents disagree, and efficient task allocation strategies.

Frameworks like crewAI are explicitly designed to orchestrate such multi-agent workflows by having the agents “role-play” together as a crew (What is crewAI? | IBM). When done right, the outcome is impressive – agents with different specialties coordinate to produce results faster and better, mirroring the dynamic of high-performing human teams. As one observer succinctly put it, multiple AIs can work together, each with its own specialty, to deliver better (and faster) results (AI Agents: Agentic Design Patterns). This pattern opens up a modular approach to AI solutions: need a new capability? Just add another agent to the team.
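
The coordinator-and-specialists structure can be sketched without any framework. In this illustrative example (not crewAI’s API), four hypothetical role functions share a context dictionary: each reads what earlier agents produced and adds its own result, and a critic cross-checks the copywriter before the manager decides. Real agents would each wrap a model and tools, and a production orchestrator could run independent subtasks in parallel rather than strictly in sequence.

```python
from typing import Callable, Dict, List, Tuple

Context = Dict[str, str]
Agent = Callable[[Context], str]

# Hypothetical specialists; each would normally wrap its own model, prompt, and tools.
def analyst(ctx: Context) -> str:
    return f"target segment for {ctx['goal']}: existing customers"

def copywriter(ctx: Context) -> str:
    segment = ctx["analyst"].split(": ", 1)[1]  # builds on the analyst's output
    return f"Copy aimed at {segment}"

def critic(ctx: Context) -> str:
    # Cross-checks another agent's work instead of trusting it blindly.
    return "approved" if "customers" in ctx["copywriter"] else "revise"

def manager(ctx: Context) -> str:
    if ctx["critic"] != "approved":
        return "campaign on hold: copy needs revision"
    return f"launch: {ctx['copywriter']}"

def run_crew(goal: str, pipeline: List[Tuple[str, Agent]]) -> Context:
    """Coordinator: runs each role over a shared context (a simple blackboard)."""
    ctx: Context = {"goal": goal}
    for role, agent in pipeline:
        ctx[role] = agent(ctx)
    return ctx

CREW = [("analyst", analyst), ("copywriter", copywriter),
        ("critic", critic), ("manager", manager)]
```

Running `run_crew("spring promo", CREW)` yields a manager decision of `"launch: Copy aimed at existing customers"`; swap in a copywriter whose output never mentions customers, and the critic’s "revise" verdict puts the campaign on hold.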

Feedback loops and continuous learning

Autonomous agents must be able to learn from their actions and outcomes; otherwise, they’ll repeat mistakes or fail to adapt to new conditions. This is where the pattern of feedback loops comes in. An agentic AI system is often designed with an internal loop of planning, acting, observing results, and refining its approach. This can happen on two levels: short-term iterative improvement and long-term learning. In the short term, we have techniques like the Reflection pattern – where an agent effectively reviews or critiques its own intermediate output to catch errors or improve the solution before proceeding (AI Agents: Agentic Design Patterns). For instance, an AI coding agent might write a piece of code, then pause and evaluate “Did I handle all edge cases?” and then refine the code based on that reflection. This built-in “self-correct” phase can substantially improve reliability, because the agent doesn’t blindly trust its first answer.
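
The Reflection pattern reduces to a draft, critique, and redraft loop. In this minimal sketch, the "agent" is a pair of hypothetical functions rather than a language model: `draft_average` writes an averaging function, and `critique_average` tests it against edge cases, mirroring the "Did I handle all edge cases?" check in the coding example.

```python
from typing import Callable, List, Tuple, TypeVar

T = TypeVar("T")

def reflect_and_refine(draft: Callable[[List[str]], T],
                       critique: Callable[[T], List[str]],
                       max_rounds: int = 3) -> Tuple[T, int, List[str]]:
    """Draft, self-critique, and redraft until the critique finds no issues."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    feedback: List[str] = []
    for round_no in range(1, max_rounds + 1):
        candidate = draft(feedback)     # produce (or revise) a solution
        feedback = critique(candidate)  # the agent reviews its own output
        if not feedback:
            return candidate, round_no, []
    return candidate, max_rounds, feedback  # best effort; open issues go back to the caller

# Hypothetical coding task: an average function that must handle empty input.
def draft_average(feedback: List[str]) -> Callable[[List[float]], float]:
    if any("empty" in item for item in feedback):
        return lambda xs: sum(xs) / len(xs) if xs else 0.0
    return lambda xs: sum(xs) / len(xs)

def critique_average(fn: Callable[[List[float]], float]) -> List[str]:
    issues = []
    try:
        fn([])
    except ZeroDivisionError:
        issues.append("fails on empty input")
    if fn([1.0, 2.0, 3.0]) != 2.0:
        issues.append("wrong result on [1, 2, 3]")
    return issues
```

Here the first draft fails on an empty list, the critique reports it, and the second draft handles that case, so the loop stops after two rounds. Capping `max_rounds` keeps a stubborn critique from looping forever and hands any unresolved issues back to the caller.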

Such self-critique and refinement loops can continue until a certain confidence or quality level is reached. On a longer-term scale, continuous learning means the agent updates its knowledge or strategy based on past experiences. This might involve updating its memory store with outcomes to adjust its decision-making context. For example, a supply chain agent that frequently overestimates demand might learn from each overestimation and gradually calibrate to improve accuracy over months. Enterprises might implement this via periodic fine-tuning of the AI model with feedback data, or through reinforcement learning where the agent is rewarded for desirable outcomes.
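
The demand-forecasting example can be illustrated with a simple memory-plus-calibration scheme. This sketch assumes a multiplicative bias correction updated by an exponential moving average; the class name and the learning rate `alpha = 0.3` are arbitrary illustrative choices, and a real system might rely on fine-tuning or reinforcement learning instead.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class CalibratedForecaster:
    """Remembers (forecast, actual) pairs and learns a multiplicative bias correction."""
    alpha: float = 0.3       # learning rate (illustrative value)
    correction: float = 1.0  # 1.0 = trust the raw forecast as-is
    memory: List[Tuple[float, float]] = field(default_factory=list)

    def forecast(self, raw: float) -> float:
        return raw * self.correction

    def record_outcome(self, raw: float, actual: float) -> None:
        self.memory.append((raw, actual))  # long-term record of outcomes
        if raw > 0:
            ratio = actual / raw
            # Exponential moving average: each outcome nudges the correction.
            self.correction = (1 - self.alpha) * self.correction + self.alpha * ratio
```

If the raw forecast is consistently 100 units and actual demand is 80, the correction moves from 1.0 to 0.94 after one outcome and converges toward 0.8 with further outcomes, so the adjusted forecast approaches actual demand.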

The goal is an agent that gets better over time – the more it works, the more it learns from both successes and failures. Agents can also incorporate human feedback in the loop (a practice OpenAI famously uses to fine-tune ChatGPT with human ratings) to align with desired outputs. A well-designed feedback loop ensures that the agent doesn’t operate in a vacuum but rather has a form of memory and adaptation. Indeed, one of the advantages of agentic AI noted in industry studies is continuous learning and improvement – these systems evolve by learning from past interactions, leading to ongoing performance gains (Agentic AI vs RAG - A Handy Guide). This means that your AI automation doesn’t have to be static software, but instead can be an improving asset. However, continuous learning also demands robust monitoring – you need to verify the agent is learning the right lessons and not drifting from its objectives (hence the need for oversight, which leads to the next pattern).

Human-in-the-loop governance

Even as AI agents become more autonomous, enterprises must maintain oversight and control, especially for high-stakes tasks. The “Human-in-the-Loop” pattern is about designing agentic systems so that critical decisions and policy enforcement involve a human checkpoint or review process. In practice, this might mean an AI agent can execute certain actions on its own (say, issuing a refund under $100), but anything above that amount requires a human manager’s approval. Or an agent preparing a financial report might draft it and only publish after a human signs off. The idea is to have governance mechanisms that keep the AI’s autonomy in check where needed, ensuring compliance with regulations, ethical standards, and business rules. Think of it as autonomy with guardrails.

This pattern can be implemented in various ways: through approval workflows, where the agent pauses and seeks confirmation for specific actions; through audit logs and dashboards, where humans can observe what the agent is doing in real time and intervene if something looks off; and through policy constraints coded into the agent, so it knows its boundaries (for example, an agent might be explicitly forbidden from deleting data or committing to expenditures above a limit).
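
The refund example translates naturally into an approval gate. This sketch combines the three mechanisms just listed: an approval workflow (refunds of $100 or more wait for a human), policy constraints (a forbidden-action list), and an audit log. The `gate` function, the `approver` callback, and the status labels are hypothetical; statuses are only labels here, and a real system would call the refund service when a decision is marked executed.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

AUTO_APPROVE_LIMIT = 100.00          # refunds below this run without a human (example from the text)
FORBIDDEN_ACTIONS = {"delete_data"}  # hard policy constraint: never allowed

Approver = Callable[[str, float], bool]

@dataclass
class Decision:
    action: str
    amount: float
    status: str  # "executed", "pending_approval", "rejected", or "blocked"

def gate(action: str, amount: float, audit_log: List[str],
         approver: Optional[Approver] = None) -> Decision:
    """Decide whether the agent may act alone, must wait for a human, or is blocked."""
    if action in FORBIDDEN_ACTIONS:
        status = "blocked"
    elif amount < AUTO_APPROVE_LIMIT:
        status = "executed"
    elif approver is None:
        status = "pending_approval"  # pause until a manager responds
    else:
        status = "executed" if approver(action, amount) else "rejected"
    audit_log.append(f"{action}:{amount:.2f}:{status}")  # every decision is auditable
    return Decision(action, amount, status)
```

With `approver=None`, a $250 refund comes back as `pending_approval`, which is the point at which a real workflow would pause and notify a manager.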

Many enterprise-grade AI orchestration tools emphasize this mix of AI and human decision nodes. Keeping humans in the loop for consequential actions can help companies catch unintended consequences before they escalate – for instance, preventing an enthusiastic marketing AI from overspending the budget, or ensuring that a doctor reviews a medical AI’s diagnosis as a sanity check. Human oversight is also essential for ethics and fairness: an HR recruitment agent might rank candidates, but a human can review the list to ensure bias didn’t creep in. It’s important to strike the right balance – too much intervention defeats the purpose of autonomy, while too little can be dangerous.

The best practice is to classify decisions by risk level and design the agent’s workflow accordingly. Low-risk, reversible decisions can be fully automated; high-risk or irreversible decisions should require human confirmation. Over time, as the organization gains trust in the agent (and the agent proves its reliability), the degree of autonomy can expand. Ultimately, human-in-the-loop is about ensuring the quality of agentic decision-making while the system earns users’ trust in the areas where it excels.
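
One way to encode such a risk classification, sketched under assumptions: each decision is tagged with a hypothetical impact level ("low", "medium", "high") and a reversibility flag, and trust is measured as the share of the agent’s reviewed decisions that humans approved. The 0.95 threshold is an arbitrary example value.

```python
from enum import Enum

class Oversight(Enum):
    AUTONOMOUS = "autonomous"
    HUMAN_CONFIRMATION = "human_confirmation"

def trust_score(approved: int, reviewed: int) -> float:
    """Share of the agent's reviewed decisions that humans approved (0.0 with no history)."""
    return approved / reviewed if reviewed else 0.0

def required_oversight(impact: str, reversible: bool, trust: float,
                       trust_threshold: float = 0.95) -> Oversight:
    if impact not in {"low", "medium", "high"}:
        raise ValueError(f"unknown impact level: {impact}")
    if impact == "high" or not reversible:
        return Oversight.HUMAN_CONFIRMATION  # high-risk or irreversible: always confirm
    if impact == "low":
        return Oversight.AUTONOMOUS  # low-risk and reversible: automate
    # Medium-impact, reversible decisions earn autonomy as the track record grows.
    return Oversight.AUTONOMOUS if trust >= trust_threshold else Oversight.HUMAN_CONFIRMATION
```

Because only the medium tier depends on the trust score, autonomy expands gradually as the agent’s track record improves, while high-impact and irreversible actions always keep a human checkpoint.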

Agentic AI implementation considerations

For CIOs and technical leaders eager to deploy agentic AI, there are several practical considerations to address. Building autonomous AI systems in an enterprise setting requires thoughtful choices in architecture, integration, and governance. Below are key factors and actionable insights to keep in mind:

Framework and architecture selection: One of the first decisions is choosing how you will build your AI agents. There are emerging frameworks such as crewAI and LangChain that can jump-start development of agentic systems. crewAI is an open-source framework purpose-built for orchestrating multiple autonomous agents working together (What is crewAI? | IBM) – it provides a structure for role-playing agents (e.g., a “Planner” agent, an “Executor” agent) and manages their collaboration as a cohesive “crew.” This can be a strong foundation if your use case naturally breaks into subtasks or requires specialists. LangChain, on the other hand, offers a toolkit for building single- or multi-agent applications around LLMs – it makes it easier to connect language models to tools, memory, and multi-step reasoning chains. Many teams have used LangChain to create agents that, for example, can parse user queries, call external APIs or databases, and then format the results, all through a sequence defined in code. If your needs are very domain-specific or you want tighter control, you might opt for a custom architecture. Building from scratch (or extending frameworks) can ensure the agent aligns perfectly with your internal systems and proprietary data. The custom route, however, requires more in-house expertise in AI and software engineering. In either case, modularity is key – design your agent with clear components for the language model (brain), tool interfaces (hands), memory (knowledge), and planner (decision logic). This modular approach, often referred to as an agentic architecture, will make it easier to test and upgrade parts independently. It’s also wise to prototype quickly with one of the frameworks to get a feel for capabilities, then refine or rebuild as needed for production.
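
The modular split into brain, hands, knowledge, and decision logic can be expressed as interfaces. The sketch below uses Python’s `typing.Protocol` to define those roles and composes them in a small `Agent` class. It is not the API of crewAI or LangChain, and `EchoModel`, `ListMemory`, and `KeywordPlanner` are hypothetical stand-ins that let the composition run without an external model.

```python
from typing import Callable, Dict, List, Protocol

class LanguageModel(Protocol):  # the "brain"
    def complete(self, prompt: str) -> str: ...

class Memory(Protocol):  # the "knowledge"
    def recall(self, query: str) -> List[str]: ...
    def store(self, item: str) -> None: ...

class Planner(Protocol):  # the "decision logic"
    def choose_tool(self, goal: str, context: List[str]) -> str: ...

Tool = Callable[[str], str]  # the "hands"

class Agent:
    """Composes the four components; each can be swapped or tested on its own."""

    def __init__(self, model: LanguageModel, tools: Dict[str, Tool],
                 memory: Memory, planner: Planner) -> None:
        self.model = model
        self.tools = tools
        self.memory = memory
        self.planner = planner

    def step(self, goal: str) -> str:
        context = self.memory.recall(goal)
        choice = self.planner.choose_tool(goal, context)
        if choice in self.tools:
            result = self.tools[choice](goal)
        else:
            # No matching tool: fall back to the model alone.
            result = self.model.complete(f"{goal}\ncontext: {context}")
        self.memory.store(result)
        return result

# Hypothetical stand-ins so the composition runs without external services.
class EchoModel:
    def complete(self, prompt: str) -> str:
        return f"model answer to: {prompt.splitlines()[0]}"

class ListMemory:
    def __init__(self) -> None:
        self.items: List[str] = []

    def recall(self, query: str) -> List[str]:
        words = query.split()
        return [item for item in self.items if words and words[0] in item]

    def store(self, item: str) -> None:
        self.items.append(item)

class KeywordPlanner:
    def choose_tool(self, goal: str, context: List[str]) -> str:
        return "lookup_ticket" if "ticket" in goal else "none"
```

Replacing `EchoModel` with a client for a real model, or `ListMemory` with a vector store, leaves `Agent.step` untouched, which is the practical payoff of keeping the components separate.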

Integration and interoperability: Agentic AI will not live in a vacuum; it must play nicely with your existing enterprise systems. Integration is often the toughest part of implementation. Your AI agents should be able to consume and produce data from your databases, ERP, CRM, ITSM, and other platforms. This might involve connecting APIs, leveraging RPA bots for systems without APIs, or even direct database access – all orchestrated by the agent. When planning an agent deployment, map out every system the agent needs to touch or monitor. Ensure the framework you choose supports those connectors, or budget time for custom integration development. Additionally, consider data format and quality – if the agent relies on data from a legacy system, you may need a preprocessing layer to translate that data into something the AI can understand (and vice versa). Interoperability isn’t just technical; it’s also about process integration. An AI agent might perform a task that a human or another system used to do. You’ll need to redefine workflows to insert the agent appropriately. For example, if an AI agent handles level-1 IT support tickets, make sure the ticketing system can route issues to the agent and that downstream escalation rules are adjusted for when the agent cannot solve an issue. Think about scalability too – if your agent needs to handle thousands of events per second, the integration architecture (message queues, event buses, etc.) must support that volume. Finally, don’t forget user integration: how will employees interact with the agent? Possibly via chat interface, email, or a dashboard. Providing an intuitive interface for humans to trigger or converse with agents (and see what they are doing) can greatly improve adoption and trust.
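
A preprocessing layer for legacy data can be as simple as a pair of translation functions. The record layout below (pipe-delimited fields with a `CUST`-prefixed ID, a `YYYYMMDD` date, a padded balance, and an `A`/`I` status flag) is a hypothetical example, not any real system’s format.

```python
from datetime import datetime
from typing import Any, Dict

def from_legacy(record: str) -> Dict[str, Any]:
    """Translate a (hypothetical) pipe-delimited legacy row into a normalized dict."""
    cust, date, balance, status = (part.strip() for part in record.split("|"))
    if not cust.startswith("CUST"):
        raise ValueError(f"unexpected customer id: {cust}")
    return {
        "customer_id": int(cust[len("CUST"):]),
        "date": datetime.strptime(date, "%Y%m%d").date().isoformat(),
        "balance": float(balance),
        "active": status == "A",
    }

def to_legacy(row: Dict[str, Any]) -> str:
    """Reverse direction: format the agent's output for the legacy system."""
    date = datetime.strptime(row["date"], "%Y-%m-%d").strftime("%Y%m%d")
    status = "A" if row["active"] else "I"
    return f"CUST{row['customer_id']:05d}|{date}|{row['balance']:9.2f}|{status}"
```

Round-tripping `"CUST00042|20240315|  1234.50|A"` through both functions yields the original string, which makes a cheap regression test for the adapter.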

Security, compliance, and ethical governance: With great power comes great responsibility. An autonomous agent with access to enterprise systems and the ability to execute tasks must be governed to prevent unintended consequences, breaches, or compliance violations. Security considerations include robust authentication and authorization – an AI agent should have role-based permissions like any user would, ensuring it can only access the data and perform the actions it’s supposed to. All tool interactions (API calls, database writes, etc.) should be logged and auditable. Using an orchestration layer or BPM engine can help enforce this, as it can serve as a gatekeeper for agent actions. In terms of compliance, you need to embed your business rules and policies into the agent’s decision-making. For example, if there are regulatory constraints (GDPR for data privacy, SOX for financial controls, etc.), the agent’s behavior must comply with them. One way is to encode these as hard constraints – e.g., the agent cannot send data to an external service unless it’s on an approved list. Another way is to have checkpoints: as discussed, human-in-the-loop for compliance-sensitive decisions. A practical tip is to incorporate a “policy engine” that the agent consults before taking any potentially sensitive action. For instance, an AI in HR must adhere to fairness and bias guidelines when shortlisting candidates – you might programmatically enforce that the agent’s recommendations meet diversity criteria. A published example noted how integrating business logic directly into the agent’s workflow ensures it stays aligned with organizational rules – for instance, a financial AI agent could be made to follow risk assessment guidelines before approving transactions (Deep dive into Agentic AI stack - Express Computer). On the ethical front, consider the implications of the agent’s decisions.
Ensure transparency: it should be possible to explain why the agent did something (hence the importance of keeping logs and perhaps having the agent produce rationale statements). Bias is another concern – if your agent’s model was trained on biased data, its autonomous decisions could reflect that. Mitigation strategies include doing bias testing on the agent’s outputs, incorporating bias-correction in its logic, or periodically retraining on more diverse data. Testing in general is critical: simulate scenarios (including edge cases) in a sandbox to see how the agent behaves. This can reveal if the agent might do something undesirable under certain conditions, so you can add safeguards. Finally, develop a clear governance plan: Who oversees the agent’s operation? How will incidents be handled if the agent makes a mistake? Having a designated “AI controller” role or committee can be useful, especially during the early phases of deployment. Remember, an agentic AI is not a set-and-forget system – treat it as a new kind of employee that needs onboarding, monitoring, and continuous training. With proper governance, you can enjoy the benefits of autonomy while minimizing risks.
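
The policy-engine idea can be sketched as a single checkpoint that every agent action passes through. In this minimal example, which uses hypothetical roles, action names, and destinations, the engine enforces role-based permissions, applies an approved-destination list to external data transfers, and logs every check, allowed or not, for later audit.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

@dataclass
class PolicyEngine:
    role_permissions: Dict[str, Set[str]]  # role -> actions it may perform
    approved_destinations: Set[str]        # allow-list for sending data outside
    audit_log: List[Tuple[str, str, str, bool]] = field(default_factory=list)

    def check(self, role: str, action: str, destination: str = "") -> bool:
        """Return True only if policy allows the action; log the decision either way."""
        allowed = action in self.role_permissions.get(role, set())
        if allowed and action == "send_external":
            # Hard constraint: external transfers only to approved services.
            allowed = destination in self.approved_destinations
        self.audit_log.append((role, action, destination, allowed))
        return allowed
```

Because the engine denies by default (unknown roles and unlisted actions are refused), adding a capability requires an explicit policy change rather than a change to the agent’s prompt.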

The bottom line

Agentic AI represents a new frontier in enterprise technology – one that holds the promise of dramatically increased automation, efficiency, and innovation. By evolving beyond static workflows and simple chatbots, autonomous AI agents can handle complex tasks, adapt to changing conditions, and collaborate in ways that were previously impossible for software. For enterprises, investing in agentic AI now is an investment in staying competitive and resilient in the years ahead. Organizations that successfully deploy these agents stand to benefit from faster decision-making, 24/7 operational coverage, and the ability to scale expertise across the organization. In contrast, those who stick solely to traditional automation may find themselves falling behind, as they’re limited to pre-defined processes in a world that rewards adaptability. We’re already seeing early movers reap rewards – from financial firms saving millions through AI-driven fraud prevention to manufacturers significantly reducing downtime via predictive maintenance. As agentic AI matures, its strategic importance will only grow, effectively becoming a catalyst for the next wave of digital transformation. According to industry analysis, agentic AI offers advantages not just in efficiency, but also in improved decision quality and customer experience, giving adopters a clear competitive edge by enabling levels of automation and insight that older systems couldn’t achieve (The Rise of Agentic AI: Unlocking the Future of Autonomous Intelligence).

For CIOs and technical business stakeholders, the key takeaway is that agentic AI is no longer experimental – it’s enterprise-ready. The building blocks (powerful AI models, robust frameworks, integration tools) are in place today. The path to implementation should be approached thoughtfully, but the barriers to entry have lowered significantly over the past couple of years. By starting now, even with pilot projects, organizations can begin to develop the skills and infrastructure needed to harness agentic systems effectively. This isn’t a quick win to deliver in a single quarter; it’s about building a foundation for the next decade, in which AI will be an active participant in business operations.

In summary, agentic AI can be a game-changer for enterprises willing to embrace it, but success depends on marrying technical innovation with governance and strategy. As you consider bringing autonomous agents into your business, here are some actionable next steps and final insights:

Start with a targeted pilot: Identify a use case that is high-impact but bounded (for example, automating level-1 IT support tickets, or handling after-hours customer inquiries). Pilot an agentic solution there to demonstrate value and learn lessons on a small scale. Early wins will build momentum and support for broader adoption.

Leverage existing frameworks and expertise: Don’t reinvent the wheel. Explore frameworks like crewAI or LangChain to accelerate development, and consider partnerships or consulting with experts who have done agent implementations. This can shorten your time to value and help avoid pitfalls. Simultaneously, educate your team – train developers and architects on this new paradigm so you build internal capability.

Prioritize integration early: Ensure your agent will have access to the data and tools it needs. Engage your IT and data teams to set up the necessary APIs, connectors, and data pipelines. The sooner your pilot agent can start ingesting real enterprise data and performing real actions, the quicker you’ll uncover integration challenges (and you will find some). Solve those in the pilot phase.

Establish governance and oversight structures: Before an agent ever goes live in production, define how you will monitor it and control it. Set clear policies for what the agent is allowed to do autonomously versus what requires human sign-off. Create an oversight role (or committee) that will review the agent’s decisions at the start, and put in place metrics and alerting – e.g., if the agent’s performance deviates or it encounters an unknown situation, humans are notified. Basically, plan for a gradual increase in autonomy as trust grows.

Scale up with iteration: Use the learnings from your pilot to iterate on design and then expand to other use cases. Treat each deployment as an opportunity to refine your agent framework and share knowledge across the organization. Over time, you might develop a library of agent components (planners, QA checkers, tool adapters) that can be reused for different business processes. Aim for an enterprise-wide agentic AI strategy, where multiple departments leverage agents under a unified governance umbrella, rather than isolated one-off projects.
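
The governance step above calls for metrics and alerting. A minimal monitor might track a rolling success rate and flag situations the agent was never designed for. The class name, window size, and 90% default target below are hypothetical choices; in practice, the alerts would feed a paging or ticketing system rather than a list.

```python
from collections import deque
from typing import Deque, List, Set

class AgentMonitor:
    """Flags unknown situations and a rolling success rate below target."""

    def __init__(self, known_situations: Set[str], window: int = 20,
                 min_success_rate: float = 0.9) -> None:
        self.known = known_situations
        self.outcomes: Deque[bool] = deque(maxlen=window)  # only the most recent results count
        self.min_success_rate = min_success_rate
        self.alerts: List[str] = []

    def record(self, situation: str, success: bool) -> None:
        if situation not in self.known:
            self.alerts.append(f"unknown situation: {situation}")
        self.outcomes.append(success)
        # Judge the success rate only once the window is full, to avoid noisy early alerts.
        if len(self.outcomes) == self.outcomes.maxlen:
            rate = sum(self.outcomes) / len(self.outcomes)
            if rate < self.min_success_rate:
                self.alerts.append(f"success rate {rate:.0%} below target")
```

Starting with a strict target and a short window during a pilot, then relaxing both as the agent proves itself, is one way to implement the gradual increase in autonomy described above.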

By following these steps, CIOs can guide their organizations to safely unlock the power of agentic AI. The journey is certainly a transformative one – we are, in many ways, teaching machines not just to help us, but to act on our behalf. When aligned with business goals and values, this capability becomes a force multiplier for human talent. In the coming years, having a fleet of reliable AI agents could very well be as standard as having an ERP system or a CRM. Enterprises that recognize this trend early and build competence in agentic AI will be poised to leap ahead, operating with a level of speed and intelligence that will set them apart in their industries.

In closing, the rise of agentic AI is an invitation for businesses to reimagine what can be automated – not just tasks, but entire workflows and decision processes. It’s an exciting new chapter in the AI revolution, and the time to start writing your organization’s part of that story is now.
