Large Language Models (LLMs) have transformed the digital landscape, emerging as versatile tools that drive innovation across numerous sectors. Their adaptability and proficiency in understanding and generating human-like text have led to diverse use cases, from creative writing and research assistance to business analytics and customer service. This article delves into the patterns of use for LLMs, exploring their evolution, applications, and implications for future technology.

The evolution of LLMs

The journey of LLMs has been marked by rapid technological progress. Early natural language processing systems were rule-based and limited in scope. In contrast, modern LLMs, powered by deep learning techniques, are trained on vast datasets that include literature, news articles, web pages, and more. This expansive training enables them to capture the nuances of human language, making them effective in tasks that require context, inference, and creativity.

The evolution of LLMs has been driven not only by advances in computational power and algorithms but also by the growing need for systems that can process and generate natural language in real time. As industries embraced digital transformation, the demand for AI that could understand, summarize, and create content led to the development and widespread adoption of these models.

A shift in chatbot evolution: From basic chatbots to fully autonomous agents

One notable pattern in the use of LLMs is the evolution of chatbot technologies:

Initial chatbots (basic Q&A): Early implementations focused on scripted interactions with users, handling simple questions with predefined answers. While functional for basic customer service, these models lacked adaptability, often frustrating users when faced with unexpected or complex questions.

RAG chatbots with company IP: To enhance intelligence and context awareness, organizations integrated Retrieval-Augmented Generation (RAG). This approach combines the conversational strengths of LLMs with real-time proprietary business knowledge, enabling chatbots to fetch, process, and generate responses using internal databases, knowledge repositories, and APIs. The result is more precise, business-specific answers.

Agentic AI systems (autonomous AI agents): The next major shift is toward agentic AI systems that no longer rely on users to manually request information but instead autonomously retrieve data, generate insights, and take action based on business needs. These systems are becoming integral for decision support, task automation, and workflow optimization. Organizations gain intelligent agents capable of optimizing operations with limited or no human intervention.

Diverse applications across sectors

Content creation and creative writing: LLMs assist in drafting reports, articles, and marketing copy. Many businesses are integrating AI-driven content generation directly into their workflows.

Research and data analysis: Companies leverage AI for automated knowledge retrieval, summarizing vast datasets, and extracting key business insights. LLMs act as research copilots that drastically reduce time spent on manual information gathering.

Customer support and virtual assistance: LLMs drive chatbots and virtual assistants, automating FAQs, troubleshooting, and customer inquiries. They improve user experience while reducing operational costs.

Software development and code generation: LLMs assist developers by suggesting code completions, debugging errors, and even generating boilerplate code. This streamlines engineering workflows and enhances productivity.

Business process automation (agentic AI): Beyond chatbots, LLMs are now being used to automate complex workflows—generating reports, triggering business processes, and even making operational recommendations without manual intervention.

Personalized customer experience & marketing automation: AI-driven personalization tailors marketing campaigns, product recommendations, and customer interactions to individual preferences.

Implementation guide for technical stakeholders

For technical decision-makers looking to integrate AI into their company, understanding how to deploy and scale LLMs is crucial. Below is a step-by-step implementation framework:

Align strategy and identify business use cases

Determine key areas where AI can add the most value—customer support, knowledge retrieval, automation, or decision-making.

Prioritize use cases that have a high return on investment and minimal disruption to existing workflows.

Choose between pre-trained and fine-tuned models

Pre-trained models (e.g., OpenAI’s GPT-4, Anthropic’s Claude) are fast to deploy but may require customization to align with business-specific data.

Fine-tuned models allow greater customization but require additional training data and infrastructure.

Decide on an AI integration approach

Chatbot or knowledge assistant? → Implement a RAG-based chatbot that retrieves information from internal documents and databases.

Process automation? → Deploy an autonomous agent that triggers workflows and actions based on real-time data.

Decision support? → Use LLMs to analyze structured and unstructured data, providing insights and recommendations.

Architect the deployment strategy

On-premises vs. cloud: Decide whether to deploy in a cloud-based environment (AWS, Azure, GCP) or keep it on-premises for security and compliance reasons.

Inference infrastructure: Use APIs (e.g., OpenAI, Azure OpenAI) for quick deployment or host open-source models (e.g., Llama, Mistral) for greater control.

Retrieval-augmented generation (RAG): Enhance the LLM by connecting it to company databases, knowledge bases, and APIs for dynamic, real-time responses.
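To make the RAG step concrete, here is a minimal sketch of the retrieve-then-prompt flow. It rests on loud assumptions: the `DOCUMENTS` list is a hypothetical stand-in for company data, word-count vectors with cosine similarity stand in for learned embeddings and a vector database, and the function names are illustrative rather than any library's API. The assembled prompt would then be sent to whichever model the deployment uses.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base standing in for company documents.
DOCUMENTS = [
    "Refunds are processed within 5 business days of the return request.",
    "Enterprise support is available 24/7 through the customer portal.",
    "Invoices are issued on the first day of each month.",
]


def tokenize(text: str) -> Counter:
    """Lowercase the text and count words (a crude stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Combine the retrieved context and the question into one prompt string."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

Calling `build_prompt("How long do refunds take?", DOCUMENTS)` yields a prompt whose context section leads with the refund policy line. In a production setting the similarity function would typically be replaced by an embedding model and a vector index.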

Implement data governance & security

Access control: Define role-based permissions for AI-generated content.

Bias & compliance checks: Regularly audit AI responses to ensure fairness and accuracy.

Data privacy: Ensure LLMs do not store sensitive or proprietary company data.
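Two of the controls above, role-based access and keeping sensitive data out of prompts, can be sketched in a few lines. This is an illustration under stated assumptions: the `ROLE_PERMISSIONS` mapping and permission names are hypothetical, and the two regular expressions catch only simple email and US-style phone patterns, so they are no substitute for a dedicated PII detection service.

```python
import re

# Hypothetical role-to-permission mapping; a real deployment would load
# this from an identity provider or policy store.
ROLE_PERMISSIONS = {
    "analyst": {"read_summaries"},
    "admin": {"read_summaries", "read_raw_documents"},
}

# Deliberately simple patterns, for illustration only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def is_allowed(role: str, permission: str) -> bool:
    """Return True if the role grants the permission; unknown roles get nothing."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def redact_pii(text: str) -> str:
    """Replace email addresses and phone numbers before text reaches the model."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)
```

Running redaction before a prompt leaves the organization's boundary means the model provider never receives the raw values, which is one way to approach the data privacy requirement.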

Build an AI feedback loop for continuous improvement

Human-in-the-loop mechanism: Allow employees to review AI responses and provide feedback.

Model monitoring: Implement logging and analytics to track AI performance and improve accuracy over time.

Iterative refinement: Use reinforcement learning or continual fine-tuning to adapt AI outputs based on real-world usage.
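The human-in-the-loop and monitoring steps can start as simply as recording reviewer verdicts and flagging weak spots. The sketch below assumes feedback is a boolean approve/reject vote keyed by a prompt template identifier; the `FeedbackStore` class, its method names, and the default thresholds are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackStore:
    """Collects reviewer verdicts per prompt template (hypothetical structure)."""

    ratings: dict[str, list[bool]] = field(default_factory=dict)

    def record(self, template_id: str, approved: bool) -> None:
        """Store one reviewer verdict for a template."""
        self.ratings.setdefault(template_id, []).append(approved)

    def approval_rate(self, template_id: str) -> float:
        """Fraction of approved responses; 0.0 when there are no votes yet."""
        votes = self.ratings.get(template_id, [])
        return sum(votes) / len(votes) if votes else 0.0

    def needs_review(self, threshold: float = 0.8, min_votes: int = 3) -> list[str]:
        """Templates with enough votes whose approval rate is below the threshold."""
        return sorted(
            template_id
            for template_id, votes in self.ratings.items()
            if len(votes) >= min_votes and sum(votes) / len(votes) < threshold
        )
```

Templates returned by `needs_review()` are candidates for prompt revision or for inclusion in the next fine-tuning dataset. The `min_votes` guard avoids reacting to a single negative rating.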

Adoption & change management

Developer enablement: Provide internal documentation and establish a Gen AI Center of Excellence (CoE).

Stakeholder education: Run training sessions for business and technical teams on capabilities and limitations.

Scaling governance: Define policies for responsible AI scaling within the enterprise.

Innovation and strategy optimization: Generative AI is transforming business strategy and innovation by enhancing decision-making, market analysis, and competitive positioning. By leveraging advanced AI models, organizations can analyze complex data, forecast trends, and optimize strategic planning, all while keeping subject-matter experts (SMEs) in the loop so that human oversight and expertise guide AI-driven insights.

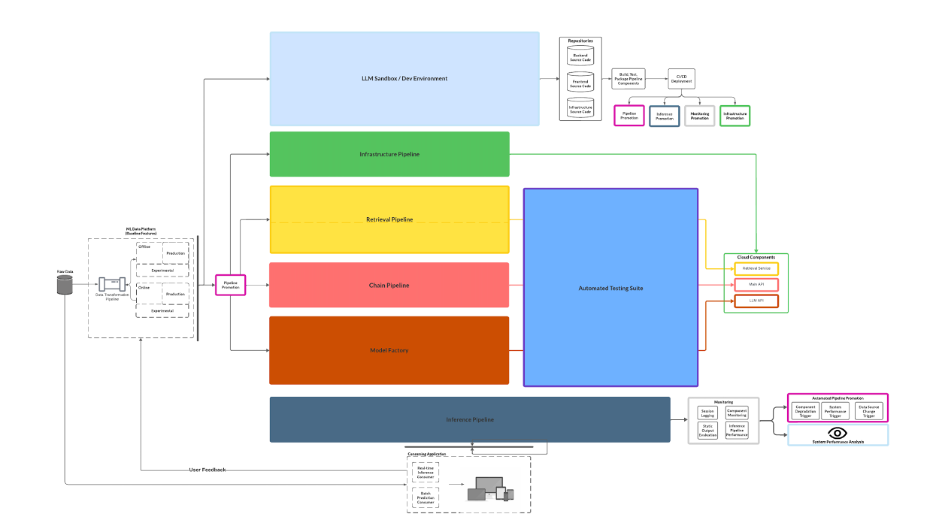

LLMOps reference architecture: Modular & extensible

To ensure rapid adaptability to evolving AI technologies, a modular LLMOps reference architecture is crucial. By decoupling major functions—such as data retrieval, model orchestration, and user feedback—into distinct pipelines, teams can update or replace components without overhauling the entire system.

Below is a conceptual breakdown of such an architecture:

Data retrieval layer

Data connectors: Integrate with various data sources (databases, APIs, file storage, SaaS platforms).

Data storage: Leverage specialized tools (e.g., Elasticsearch, Pinecone, FAISS) to index and store embeddings in a vector database, enabling high-speed similarity searches and efficient retrieval of relevant information.

Data preprocessing pipeline: Clean, transform, and enrich data before feeding it into the LLM.
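As an illustration of the preprocessing pipeline in this layer, the sketch below cleans raw text, splits it into overlapping word windows, and attaches metadata to each chunk before it would be embedded and indexed. The function names, the default window sizes, and the metadata fields are assumptions chosen for the example, not a prescribed schema.

```python
import re


def clean(text: str) -> str:
    """Strip HTML-like tags and collapse runs of whitespace."""
    without_tags = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", without_tags).strip()


def chunk(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words that share `overlap` words."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def preprocess(raw: str, source: str, size: int = 50, overlap: int = 10) -> list[dict]:
    """Clean, chunk, and enrich each chunk with metadata for later indexing."""
    return [
        {"source": source, "chunk_id": i, "text": c}
        for i, c in enumerate(chunk(clean(raw), size, overlap))
    ]
```

Overlapping windows reduce the chance that a sentence relevant to a query is cut in half at a chunk boundary. The `source` field lets the orchestration layer cite where a retrieved passage came from.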

Model orchestration layer

LLM inference engine: The core engine that handles requests to one or multiple LLMs (e.g., GPT-4, open-source models).

RAG mechanism: Integrates with the data retrieval layer to fetch relevant context in real time.

Agentic workflow: Logic that decides which tasks to perform autonomously, including multi-step workflows (e.g., retrieving additional data, calling APIs, or summarizing results).

Policy & governance: Enforces rules around model usage, data handling, and compliance (e.g., content filtering, PII redaction).
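The agentic workflow and policy components of this layer can be pictured as a loop over planned tool calls, with a policy check before each one. In this sketch everything is a stand-in: the two tools return canned strings, `plan` is a hard-coded placeholder for the step where a real agent would ask the LLM which tools to call, and `ALLOWED_TOOLS` is a hypothetical policy list.

```python
from typing import Callable


# Hypothetical tools; a real system would query databases or call APIs here.
def lookup_order(order_id: str) -> str:
    return f"Order {order_id}: shipped"


def summarize(text: str) -> str:
    return text.split(":")[0] + " summary ready"


TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_order": lookup_order,
    "summarize": summarize,
}

# Policy layer (assumption): only these tools may run without human approval.
ALLOWED_TOOLS = {"lookup_order", "summarize"}


def plan(task: str) -> list[str]:
    """Stand-in planner; a real agent would ask the LLM to choose the tools."""
    return ["lookup_order", "summarize"] if "order" in task.lower() else []


def run_agent(task: str, argument: str, max_steps: int = 5) -> list[str]:
    """Run the planned tools in order, feeding each result into the next step."""
    trace: list[str] = []
    current = argument
    for tool_name in plan(task)[:max_steps]:
        if tool_name not in ALLOWED_TOOLS:
            trace.append(f"blocked: {tool_name}")
            break
        current = TOOLS[tool_name](current)
        trace.append(f"{tool_name} -> {current}")
    return trace
```

The returned trace doubles as an audit log of what the agent did. The `max_steps` cap is a simple guard against runaway multi-step workflows.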

User interaction layer

Front-end applications: Chat interfaces, web portals, or third-party integrations where users interact with the AI.

API gateway: Provides a standardized interface to the orchestration layer, making it easy to plug in new front-end clients or integrate with other services.

Authentication & authorization: Ensures only permitted users and systems can access the LLM or data.
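A gateway of the kind described here can be reduced to one function that authenticates the caller, validates the request, and forwards it to the orchestration layer. The sketch below assumes requests arrive as plain dictionaries and that clients present a static API key. The keys, client names, and response shape are hypothetical, and a production gateway would normally rely on an established framework and token standard instead.

```python
import hmac
from typing import Callable, Optional

# Hypothetical API keys mapped to the front-end clients that own them.
API_KEYS = {
    "key-web-portal": "web_portal",
    "key-chat-widget": "chat_widget",
}


def authenticate(api_key: str) -> Optional[str]:
    """Return the client name for a valid key, or None if the key is unknown."""
    for known_key, client in API_KEYS.items():
        # Constant-time comparison avoids leaking key contents through timing.
        if hmac.compare_digest(known_key.encode("utf-8"), api_key.encode("utf-8")):
            return client
    return None


def handle_request(request: dict, orchestrate: Callable[[str], str]) -> dict:
    """Authenticate, validate, and forward a request to the orchestration layer."""
    client = authenticate(request.get("api_key", ""))
    if client is None:
        return {"status": 401, "error": "unauthorized"}
    prompt = request.get("prompt", "").strip()
    if not prompt:
        return {"status": 400, "error": "prompt is required"}
    return {"status": 200, "client": client, "answer": orchestrate(prompt)}
```

Because `handle_request` takes the orchestration function as a parameter, a new front-end client only needs a key, and the orchestration layer can be swapped without touching the gateway.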

Feedback & monitoring layer

User feedback loop: Allows end-users or domain experts to rate, correct, or refine AI responses.

Observability & logging: Collects metrics on latency, accuracy, user satisfaction, and usage patterns.

Automated retraining pipeline: Feeds user feedback and new data into the next iteration of the model, either through fine-tuning or reinforcement learning.
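For the observability component, a decorator is one lightweight way to capture latency and error metrics around every model call. The sketch below assumes an in-memory `METRICS` list as the sink and uses a placeholder `generate` function in place of a real model call; both names are hypothetical.

```python
import statistics
import time
from functools import wraps

# Hypothetical in-memory sink; production systems would ship these records
# to a metrics or logging backend.
METRICS: list[dict] = []


def observed(name: str):
    """Decorator that records latency and success for each call."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            ok = True
            try:
                return fn(*args, **kwargs)
            except Exception:
                ok = False
                raise
            finally:
                METRICS.append({
                    "name": name,
                    "latency_s": time.perf_counter() - start,
                    "ok": ok,
                })
        return wrapper
    return decorator


def summarize_metrics(name: str) -> dict:
    """Aggregate call count, error rate, and median latency for one operation."""
    rows = [m for m in METRICS if m["name"] == name]
    if not rows:
        return {"calls": 0, "error_rate": 0.0, "median_latency_s": 0.0}
    return {
        "calls": len(rows),
        "error_rate": sum(not m["ok"] for m in rows) / len(rows),
        "median_latency_s": statistics.median(m["latency_s"] for m in rows),
    }


@observed("generate")
def generate(prompt: str) -> str:
    """Placeholder for a real model call."""
    if not prompt:
        raise ValueError("empty prompt")
    return prompt.upper()
```

Accuracy and user satisfaction need human or automated evaluation signals, so they would be joined to these records from the feedback loop, not measured by the decorator itself.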

DevOps & MLOps integration

CI/CD pipeline: Automates code changes, infrastructure updates, and model deployments.

Version control: Tracks changes to data pipelines, model configurations, and code repositories.

Rollbacks & blue-green deployments: Enables safe testing of new model versions or components before full rollout.
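The rollout and rollback idea can be sketched as a small router that splits traffic between a stable ("blue") and a candidate ("green") model version. Strictly speaking, a gradual percentage split like this is closer to a canary release, while a classic blue-green switch moves all traffic at once, which corresponds to `promote()` and `rollback()` here. The class name and the version labels in the usage note are hypothetical.

```python
import hashlib


class ModelRouter:
    """Routes requests between a stable ("blue") and a candidate ("green") version."""

    def __init__(self, blue: str, green: str, green_percent: int = 0):
        self.blue = blue
        self.green = green
        self.green_percent = green_percent

    def _bucket(self, user_id: str) -> int:
        # A stable hash keeps each user in the same bucket (0-99) across requests.
        digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
        return int(digest, 16) % 100

    def route(self, user_id: str) -> str:
        """Return the model version that should serve this user."""
        if self._bucket(user_id) < self.green_percent:
            return self.green
        return self.blue

    def promote(self) -> None:
        """Send all traffic to the candidate version."""
        self.green_percent = 100

    def rollback(self) -> None:
        """Send all traffic back to the stable version."""
        self.green_percent = 0
```

A team might construct `ModelRouter("model-v1", "model-v2", green_percent=10)`, watch the monitoring metrics for the candidate, and then call either `promote()` or `rollback()`.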

Benefits of a modular approach

Rapid updates: Replace or upgrade the LLM engine, vector database, or front-end interface independently.

Scalability: Scale each layer (e.g., data retrieval, inference, or feedback processing) independently as usage grows.

Flexibility: Easily integrate new technologies—like alternative LLMs, advanced vector search, or novel data processing tools.

Resilience: Failure in one pipeline (e.g., data retrieval) doesn’t bring down the entire system, thanks to decoupled components.
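In code, the benefits listed above come from having components depend on small interfaces instead of concrete implementations. The sketch below uses Python's `typing.Protocol` to express that idea. The `LLMEngine` and `Retriever` interfaces and the stub classes are hypothetical illustrations, not part of any specific framework.

```python
from typing import Protocol


class LLMEngine(Protocol):
    def complete(self, prompt: str) -> str: ...


class Retriever(Protocol):
    def search(self, query: str) -> list[str]: ...


class EchoEngine:
    """Stub engine; a hosted API client or a local model would expose the same method."""

    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class ReverseEngine:
    """A second stub, used to show that engines are interchangeable."""

    def complete(self, prompt: str) -> str:
        return prompt[::-1]


class StaticRetriever:
    """Substring search over a fixed list; a vector database client could replace it."""

    def __init__(self, docs: list[str]):
        self.docs = docs

    def search(self, query: str) -> list[str]:
        return [d for d in self.docs if query.lower() in d.lower()]


class Assistant:
    """Depends only on the interfaces, so each component can be replaced independently."""

    def __init__(self, engine: LLMEngine, retriever: Retriever):
        self.engine = engine
        self.retriever = retriever

    def answer(self, query: str) -> str:
        context = " | ".join(self.retriever.search(query))
        return self.engine.complete(f"{context} :: {query}")
```

Swapping `EchoEngine()` for `ReverseEngine()` changes the output without any change to `Assistant` or the retriever, which is the property that makes independent upgrades and scaling practical.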

Looking to the future: The rise of AI agents

The future of LLMs is shifting toward fully autonomous AI agents, systems defined by the following capabilities:

Adaptive learning & self-optimization: Instead of waiting for users to manually prompt an LLM, agents will learn from usage and deliver proactive AI-driven insights.

Multi-agent collaboration: Future AI agents will collaborate with other AI systems and human users, dynamically delegating tasks, sharing insights, and optimizing workflows across departments.

Real-time copilots: AI will provide employees with the right information at the right time, enhancing productivity without requiring explicit requests.

Context-aware decision making: AI will analyze real-time data streams, business context, and historical trends to predict needs and take proactive actions without human intervention.

By moving from chatbots → RAG-based assistants → autonomous AI agents, businesses can unlock new levels of efficiency and automation.

Final thoughts: Turning AI into a competitive advantage

Understanding LLM use patterns and implementing a modular LLMOps architecture empowers technical leaders to make informed AI adoption decisions. Whether deploying chatbots, integrating RAG-based knowledge retrieval, or embracing full AI automation, the key is strategic implementation and continuous improvement.

For companies that effectively integrate LLMs, AI evolves from a mere tool to a transformative strategic asset, driving innovation, enhancing decision-making, and automating operations in ways previously unimaginable.

Take action today: Identify your highest-impact AI use case and start building the future of your organization. By leveraging a decoupled, modular architecture, you’ll be ready to adapt to the rapid changes in AI technologies—maintaining a competitive edge now and well into the future.
