Queues are a common and vital piece of infrastructure: they let systems scale and sustain high throughput without one activity blocking another. The principle is as simple as it sounds: first in, first out, or FIFO for short. Just like people waiting in line to order food, the first one in line orders first, and as the line grows, the last person to join waits longer to be served. Microsoft Azure Service Bus (ASB) is Microsoft’s premier enterprise-level messaging service built on this FIFO principle. ASB lets scalable cloud-based applications be built on fast, reliable messaging infrastructure. On a recent client engagement, I was tasked with implementing an integration with ASB, and I learned quite a few valuable lessons about error handling and best practices along the way.

Topics & Queues: A Quick Primer

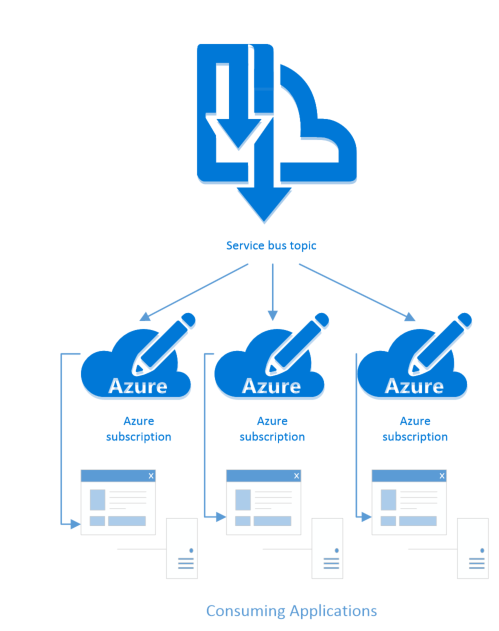

ASB has two core pieces of functionality: queues and topics. A queue is nothing more than a FIFO queue, while a topic is much like a queue but can deliver each message to more than one receiver. ASB queues always involve two parties: a producer and a consumer. The producer produces messages and puts them into the queue, while the consumer periodically polls for messages and consumes them. Creating and using queues in ASB is incredibly simple. Topics are like queues except they allow for a publish-subscribe model: instead of only one consumer per message, many consumers can subscribe to the same topic and receive their own copy of each message. Consumers subscribe to a topic through a subscription. Think of a topic as a top-level queue with multiple subscriptions that live within it. Each subscription is a uniquely named virtual queue that receives the messages broadcast by the topic it is registered to (see diagram). For more information, head over to this Microsoft Docs article to learn about implementing topics in a solution.
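To make the fan-out behavior concrete, here is a small in-memory model of a topic and its subscriptions. It is only an illustration, not the Service Bus SDK: `MiniTopic` and its methods are hypothetical names. Each subscription is modeled as its own FIFO queue, and publishing to the topic enqueues a separate copy of the message in every subscription.

```csharp
using System.Collections.Generic;

// In-memory illustration only: MiniTopic is a hypothetical class, not the Service Bus SDK.
// Every subscription is its own FIFO queue and gets a copy of each published message.
public class MiniTopic
{
    private readonly Dictionary<string, Queue<string>> _subscriptions =
        new Dictionary<string, Queue<string>>();

    // Registering a subscription creates a new, empty virtual queue under the topic.
    public void AddSubscription(string name) => _subscriptions[name] = new Queue<string>();

    // Publishing fans the message out: each subscription enqueues its own copy.
    public void Publish(string message)
    {
        foreach (Queue<string> queue in _subscriptions.Values)
        {
            queue.Enqueue(message);
        }
    }

    // A consumer reads from one subscription in FIFO order; null means it is empty.
    public string Receive(string subscriptionName)
    {
        Queue<string> queue = _subscriptions[subscriptionName];
        return queue.Count > 0 ? queue.Dequeue() : null;
    }
}
```

With two subscriptions, `billing` and `shipping`, publishing `order-1` and then `order-2` lets each subscription receive `order-1` first, independently of the other.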

Queues and subscriptions offer consumers two ways to receive messages. The consumer chooses between them through the “ReceiveMode” setting on the client it uses to receive, which can be either PeekLock or ReceiveAndDelete. PeekLock is a two-step operation: the consumer receives (“peeks” at) the message, which stays locked on the queue or subscription so other consumers can’t take it, and then makes a second request to complete the processing of that message. When the complete call is made, the message is removed from the queue or subscription from which it was received. In ReceiveAndDelete mode, the message is received and deleted from the queue or subscription in a single operation, with no explicit complete call. PeekLock is appropriate for applications that cannot tolerate missing a message, and it is the default receive mode.
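The difference between the two receive modes comes down to when the message leaves the queue. The sketch below is an in-memory model, not the SDK; `MiniQueue`, `ReceiveAndDelete`, `PeekLock`, and `Complete` are hypothetical names chosen to mirror the concepts (the real SDK expresses the mode as a `ReceiveMode` value and completion as `Complete()` on the received message). Lock expiry is deliberately left out here.

```csharp
using System.Collections.Generic;

// In-memory illustration only: MiniQueue and its methods are hypothetical names,
// not the Service Bus SDK. It models what each receive mode does to a message.
public class MiniQueue
{
    private readonly Queue<string> _available = new Queue<string>();
    private readonly List<string> _locked = new List<string>();

    // Messages still owned by the queue: waiting, or locked by a consumer.
    public int Count => _available.Count + _locked.Count;

    public void Send(string message) => _available.Enqueue(message);

    // ReceiveAndDelete: a single step. The message leaves the queue as it is handed out,
    // so if the consumer crashes mid-processing, the message is gone for good.
    public string ReceiveAndDelete() => _available.Count > 0 ? _available.Dequeue() : null;

    // PeekLock, step 1: hand the message out, but keep it on the queue under a lock.
    public string PeekLock()
    {
        if (_available.Count == 0) return null;
        string message = _available.Dequeue();
        _locked.Add(message);
        return message;
    }

    // PeekLock, step 2: the consumer finished processing, so the message is removed.
    public void Complete(string message) => _locked.Remove(message);
}
```

Between `PeekLock()` and `Complete()` the message still counts as part of the queue, which is what lets PeekLock survive a consumer crash; ReceiveAndDelete trades that safety for one fewer round trip.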

Dead Letter Queues

Unbeknownst to me when I first started working with Azure Service Bus, every queue and every topic subscription has a secondary sub-queue called the “Dead Letter Queue” (DLQ). To help explain this concept, picture a common scenario. Your favorite local neighborhood delivery man has tried to deliver a package to you for almost a week. He needs you to sign for the package, but you aren’t home to accept it. Like any normally functioning human being, he gets frustrated and leaves a passive-aggressive note asking you to “pick up your package at your earliest convenience.” When you receive the note, you call the local post office and arrange a time to pick up the package.

This failed package delivery is much like how dead-letter queues work in Azure Service Bus, except for one key difference: nobody leaves a note. When a message cannot be delivered to the consumer in Azure Service Bus, the consumer is not notified that the message was not received; the message is simply moved to the DLQ. Obviously, this would be hugely problematic for an enterprise application that relies on receiving every message that was sent. There are five different ways a message can be automatically sent to the DLQ. I am only going to discuss the first and most common way messages end up in the DLQ wasteland (for the other reasons and more info, hop to it here). Every queue and subscription has a MaxDeliveryCount property, which defaults to 10. When messages are received in PeekLock mode, each one is locked for a limited lock duration, and that lock can expire. If the consuming application fails to call Complete on the message before the lock expires, the DeliveryCount property on the message is incremented. Consuming applications can also call the Abandon method on the message, which releases the lock and likewise increments the message’s DeliveryCount. When the DeliveryCount of the message exceeds the MaxDeliveryCount of the queue or subscription, the message ends up in the DLQ.
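The delivery-count rule can be modeled in a few lines. This is a simplified, in-memory sketch, not Service Bus itself: `DeliveryModel` and `Deliver` are hypothetical names, an abandoned message and an expired lock are both treated as one failed attempt, and `MaxDeliveryCount` defaults to 10 to match the Service Bus default.

```csharp
using System;
using System.Collections.Generic;

// Simplified in-memory model of the MaxDeliveryCount rule; DeliveryModel and its
// members are hypothetical, not Service Bus SDK types.
public class DeliveryModel
{
    public int MaxDeliveryCount { get; }
    public List<string> DeadLetterQueue { get; } = new List<string>();

    public DeliveryModel(int maxDeliveryCount = 10) => MaxDeliveryCount = maxDeliveryCount;

    // Delivers one message repeatedly until the handler succeeds or the message
    // is dead-lettered. Returns the number of delivery attempts made.
    public int Deliver(string message, Func<string, bool> tryProcess)
    {
        int deliveryCount = 0;
        while (true)
        {
            deliveryCount++; // this attempt is delivery number deliveryCount
            if (tryProcess(message))
            {
                return deliveryCount; // Complete() was called; the message is removed
            }

            // Abandoned or lock expired: the next delivery would exceed
            // MaxDeliveryCount, so the message moves to the DLQ instead.
            if (deliveryCount >= MaxDeliveryCount)
            {
                DeadLetterQueue.Add(message);
                return deliveryCount;
            }
        }
    }
}
```

Under this model, a handler that always fails is delivered 10 times and then lands in the DLQ, while a handler that succeeds on its third try is completed after 3 deliveries and never touches the DLQ.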

Poison messages tend to be the cause of much of this headache. A poison message is a message sent to the queue or topic that the consuming application cannot process correctly. Maybe some data in the message body is not of the type the consuming application expects, causing a deserialization error. If that error isn’t handled, the message sits locked until its lock duration (think processing timeout) expires, and its DeliveryCount is incremented. Repeat that process nine more times and you’ve hit the MaxDeliveryCount of the queue or subscription. As a general best practice, handle all exceptions in the message processing code so the lock duration is never reached. Call Abandon on the message whenever an exception happens during processing, so the message is released back to the queue or subscription right away and its DeliveryCount is incremented. The default lock duration is 60 seconds. As you can imagine, if you had to wait 60 seconds for the next retry every time an error was thrown, you would greatly slow your application down.

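```csharp
using System;
using Microsoft.ServiceBus.Messaging; // WindowsAzure.ServiceBus NuGet package

private void ProcessMessage(BrokeredMessage message)
{
    try
    {
        // Process message code goes here.

        // Processing succeeded: remove the message from the queue/subscription.
        message.Complete();
    }
    catch (Exception ex)
    {
        // Log the failure, then release the lock right away so the message is redelivered
        // (and its DeliveryCount incremented) without waiting for the lock to expire.
        Console.Error.WriteLine(ex);
        message.Abandon();
    }
}
```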
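The cost of letting locks expire is easy to estimate. Assuming the defaults above (a MaxDeliveryCount of 10 and a 60-second lock duration) and ignoring the processing time itself, a poison message that is never abandoned spends roughly 10 × 60 s = 10 minutes locked before it is dead-lettered. `RetryTiming` is a hypothetical helper for that arithmetic:

```csharp
using System;

public static class RetryTiming
{
    // Approximate time before a poison message reaches the DLQ when every failed
    // attempt waits for its lock to expire: one full lock duration per delivery.
    // Processing time and redelivery latency are ignored.
    public static TimeSpan TimeToDeadLetterIfLockExpires(int maxDeliveryCount, TimeSpan lockDuration) =>
        TimeSpan.FromTicks(lockDuration.Ticks * maxDeliveryCount);
}
```

`RetryTiming.TimeToDeadLetterIfLockExpires(10, TimeSpan.FromSeconds(60))` returns `00:10:00`. Calling `Abandon()` in the catch block instead makes the message available again right away, so the same ten attempts take only about as long as the processing itself.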

Messages stay in the DLQ until you explicitly receive and complete them; Service Bus does not clean up the DLQ automatically. Unfortunately for most of us, it’s not usually OK to miss messages. Enterprise applications rely on processing every order and message that gets placed in a system. To make sure your application doesn’t miss a message when integrating with ASB, you must plan and code for DLQs. The following approaches will help keep your ASB applications humming along.

Approach 1: DLQ Monitoring, Logging & Alerting Service

Whether you are the producer or the consumer of the messages, you can monitor the DLQ to make sure it stays clear. Pick an interval (every 15 minutes, every hour) and have a service or scheduled task check the DLQ for messages. Send alerts to your team and any other key stakeholders so they can easily investigate why messages ended up in the DLQ. The following is a simple code sample from a monitoring service I built into our ASB consuming application for a subscription to a topic.

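```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;
using Microsoft.ServiceBus.Messaging; // WindowsAzure.ServiceBus NuGet package

public List<DeadLetterQueueMessage> MonitorDeadLetterQueue(string topicName)
{
    var messagesFromDeadLetterQueue = new List<DeadLetterQueueMessage>();

    // AzureBusEndPointReceiveConnectionString and AzureBusSubscriptionName are
    // configuration values defined elsewhere in the consuming application.
    MessagingFactory factory = MessagingFactory.CreateFromConnectionString(AzureBusEndPointReceiveConnectionString);

    // Resolves to "<topic>/Subscriptions/<subscription>/$DeadLetterQueue".
    MessageReceiver deadLetterClient = factory.CreateMessageReceiver(
        SubscriptionClient.FormatDeadLetterPath(topicName, AzureBusSubscriptionName));

    try
    {
        while (true)
        {
            // Wait briefly for a message; null means the DLQ is (currently) empty.
            BrokeredMessage msg = deadLetterClient.Receive(TimeSpan.FromSeconds(5));
            if (msg == null)
            {
                break;
            }

            using (var reader = new StreamReader(msg.GetBody<Stream>(), Encoding.UTF8))
            {
                // The dead-letter properties may be missing (for example, when a message
                // was dead-lettered without a description), so read them defensively.
                object reason, description;
                msg.Properties.TryGetValue("DeadLetterReason", out reason);
                msg.Properties.TryGetValue("DeadLetterErrorDescription", out description);

                messagesFromDeadLetterQueue.Add(new DeadLetterQueueMessage
                {
                    JSONPayload = reader.ReadToEnd(),
                    MessageId = msg.MessageId,
                    DeliveryCount = msg.DeliveryCount,
                    DeadLetterReason = reason?.ToString(),
                    DeadLetterErrorDescription = description?.ToString()
                });
            }

            // Remove the message from the DLQ so it is not reported again.
            msg.Complete();
        }
    }
    finally
    {
        deadLetterClient.Close();
        factory.Close();
    }

    return messagesFromDeadLetterQueue;
}
```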

Because every queue and subscription has its own DLQ, the DLQ is addressed with a special naming convention. Microsoft makes it easy to get that path via the SubscriptionClient.FormatDeadLetterPath() method, which takes the topic and subscription names, builds the subscription’s path, and tacks “/$DeadLetterQueue” onto the end of it. The monitoring method above loops over the messages in the DLQ and builds a list of a custom class I built called “DeadLetterQueueMessage” to capture the pertinent data from these messages. The service collects the messages (BrokeredMessage objects) from the DLQ at whatever interval you set, and you can log them to a log database, a logging service, or simply a file on a file share.
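The naming convention is simple enough to reproduce by hand, which helps when reading portal or log output. The helper below is a hypothetical illustration of the path format, not the SDK method: a queue’s DLQ is `<queue>/$DeadLetterQueue`, and a subscription’s DLQ is `<topic>/Subscriptions/<subscription>/$DeadLetterQueue`. In application code, prefer the SDK’s own `FormatDeadLetterPath` methods.

```csharp
// Hypothetical helper that mirrors the documented entity-path convention;
// it is not the SDK's implementation.
public static class DeadLetterPaths
{
    public const string DeadLetterQueueSuffix = "/$DeadLetterQueue";

    // A queue's DLQ hangs directly off the queue path.
    public static string ForQueue(string queueName) => queueName + DeadLetterQueueSuffix;

    // A subscription's DLQ hangs off the subscription path, which lives under its topic.
    public static string ForSubscription(string topicName, string subscriptionName) =>
        topicName + "/Subscriptions/" + subscriptionName + DeadLetterQueueSuffix;
}
```

For example, `DeadLetterPaths.ForSubscription("orders", "billing")` returns `orders/Subscriptions/billing/$DeadLetterQueue`.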

JSONPayload is the body of the message. You can never have too much information when it comes to logging an issue!

The MessageId is simply the unique identifier attached to the message.

DeliveryCount is the property discussed earlier; it shows how many times Service Bus attempted to deliver the message.

DeadLetterErrorDescription and DeadLetterReason are two properties that only get added to the BrokeredMessage when the message ends up in the DLQ. The reason tells the consumer why the message was moved to the DLQ, and the description provides a little more context around that reason.

After logging these dead-lettered messages, we send our team a summary email with information about each message found in the DLQ. This alerting and logging service has helped us understand what action to take when messages show up in the DLQ.
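The summary email can be built directly from the list the monitoring method returns. The post doesn’t show the `DeadLetterQueueMessage` class, so its shape below is an assumption based on the fields described above, and `DeadLetterAlert.BuildSummary` is a hypothetical helper. Actually sending the email is left out.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text;

// Assumed shape of the custom class, based on the fields described above.
public class DeadLetterQueueMessage
{
    public string JSONPayload { get; set; }
    public string MessageId { get; set; }
    public int DeliveryCount { get; set; }
    public string DeadLetterReason { get; set; }
    public string DeadLetterErrorDescription { get; set; }
}

public static class DeadLetterAlert
{
    // Builds a plain-text summary for the alert email: a count per DeadLetterReason,
    // then one line per message. Returns null when there is nothing to report.
    public static string BuildSummary(IReadOnlyCollection<DeadLetterQueueMessage> messages)
    {
        if (messages.Count == 0) return null;

        var body = new StringBuilder();
        body.AppendLine($"{messages.Count} message(s) found in the dead-letter queue.");

        foreach (var group in messages.GroupBy(m => m.DeadLetterReason ?? "(no reason)"))
        {
            body.AppendLine($"  {group.Key}: {group.Count()}");
        }

        foreach (var m in messages)
        {
            body.AppendLine($"- {m.MessageId} (deliveries: {m.DeliveryCount}): {m.DeadLetterErrorDescription}");
        }

        return body.ToString();
    }
}
```

Grouping by DeadLetterReason first lets the team see at a glance whether one cause dominates before reading through individual messages.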

Approach 2: Re-Enqueue Messages From DLQ

As the consuming application, you can try to re-enqueue messages that have wound up in the DLQ. Granted, if these are poison messages and the mismatch between the message and the consuming application has not been resolved, they will simply end up in the DLQ again. In other cases, however, re-enqueuing messages that wind up in the DLQ can be a viable approach. When you send these messages back to the topic, beware: every subscription on the topic will receive the message again. I would only recommend this approach if your application is the only application subscribing to that topic.
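The fan-out caveat is easy to see in a small model. This in-memory sketch is not the Service Bus SDK; `ReenqueueModel` and its members are hypothetical. It follows the re-enqueue steps described in this section: drain one subscription’s DLQ, clear each message out of it, and republish the body to the topic as a new message, which every subscription then receives.

```csharp
using System.Collections.Generic;

// In-memory model of re-enqueuing dead-lettered messages back to a topic.
// ReenqueueModel and its members are hypothetical, not Service Bus SDK types.
public class ReenqueueModel
{
    // Each subscription's main queue and its DLQ, keyed by subscription name.
    public Dictionary<string, List<string>> Subscriptions { get; } = new Dictionary<string, List<string>>();
    public Dictionary<string, List<string>> DeadLetterQueues { get; } = new Dictionary<string, List<string>>();

    public void AddSubscription(string name)
    {
        Subscriptions[name] = new List<string>();
        DeadLetterQueues[name] = new List<string>();
    }

    // Publishing to the topic copies the body into every subscription.
    public void PublishToTopic(string body)
    {
        foreach (List<string> queue in Subscriptions.Values) queue.Add(body);
    }

    // Drains one subscription's DLQ and republishes each body to the topic as a
    // brand-new message. Returns how many messages were re-enqueued.
    public int ReenqueueFromDeadLetter(string subscriptionName)
    {
        List<string> dlq = DeadLetterQueues[subscriptionName];

        // Collect first, then clear the DLQ (the "Complete" step) so a later run
        // does not send the same messages again.
        var toSend = new List<string>(dlq);
        dlq.Clear();

        foreach (string body in toSend) PublishToTopic(body);
        return toSend.Count;
    }
}
```

After re-enqueuing one dead-lettered message from `billing`, both `billing` and `shipping` hold a copy, which is why this approach fits best when your application is the topic’s only subscriber.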

Just like the monitoring service, this method needs to run on a schedule. The service receives each message in the DLQ, reads the message body, and creates a brand-new BrokeredMessage from it. The new message is added to a list to send, while the old DLQ message is cleared out of the DLQ so the service does not pick it up again later. Once the list is built, we iterate through it and use the MessageSender class to send each message back to the topic.

When working with Azure Service Bus, knowing about the dead-letter queue can save you a lot of time and grief. If you have any other best practices, approaches, or general tips on dealing with dead-letter queues or Service Bus, feel free to post a comment, tweet us @CrederaMSFT, or contact us online. If you’re looking for more insight into using Azure Service Bus or other Microsoft Azure service offerings, please reach out! We would love to hear from you.
